In the world of computers and networks, two key metrics define performance: latency and throughput. These terms are often used interchangeably, but they represent distinct concepts. Understanding these core metrics enables network administrators and users to troubleshoot performance issues, enhance network reliability, and deliver seamless digital experiences across a multitude of applications and industries.

What is Latency?

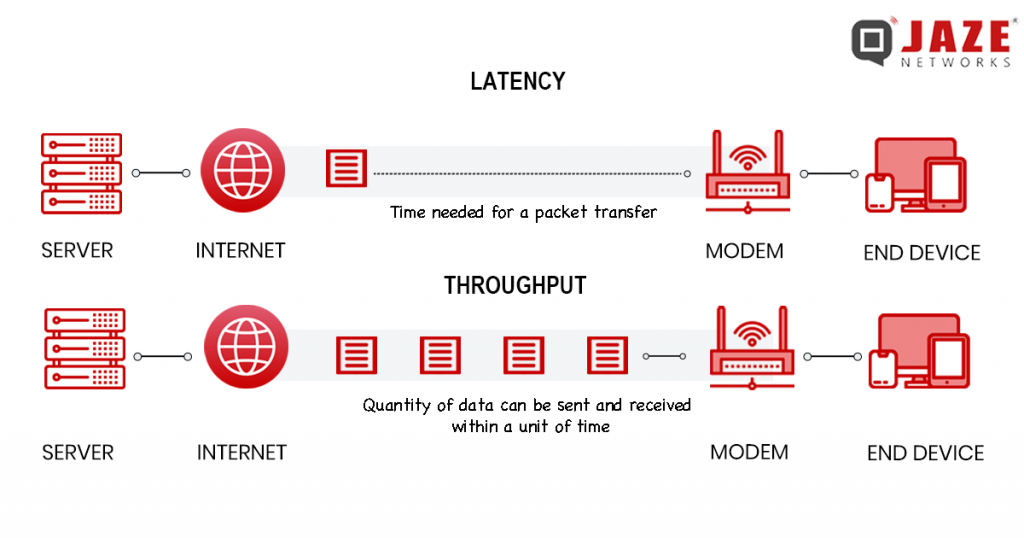

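Latency is the time it takes for data to travel from its source to its destination; it is the delay incurred during data transmission. Imagine sending a packet of information from one point to another: the time it takes for that packet to arrive is its latency. Latency is often loosely called “ping”, after the tool commonly used to measure it, although ping actually reports the round-trip time (RTT): the time for a packet to reach the destination plus the time for the reply to come back.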
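Distance alone puts a floor under latency, because signals cannot travel faster than light in the medium. The sketch below is a rough back-of-the-envelope calculation, assuming signals move through optical fiber at about 200,000 km/s (roughly two thirds of the speed of light in a vacuum) along a straight path. Real routes are longer, and queuing and processing delays come on top, so measured latency is always higher than these figures. The function names are illustrative only.

```python
# Assumed signal speed in optical fiber (~2/3 of c). Real paths are longer
# than the straight-line distance, so treat results as a lower bound.
FIBER_SPEED_KM_PER_S = 200_000


def propagation_delay_ms(distance_km: float) -> float:
    """One-way propagation delay in milliseconds for a given fiber distance."""
    return distance_km / FIBER_SPEED_KM_PER_S * 1000


def round_trip_ms(distance_km: float) -> float:
    """Round-trip time (what ping reports), ignoring queuing and processing."""
    return 2 * propagation_delay_ms(distance_km)


if __name__ == "__main__":
    for km in (100, 1_000, 6_000):
        print(f"{km:>6} km: one-way {propagation_delay_ms(km):.1f} ms, "
              f"RTT {round_trip_ms(km):.1f} ms")
```

Under these assumptions a server 6,000 km away can never answer in less than about 60 ms round trip, while one 100 km away can respond within about 1 ms, which is why moving data physically closer to users lowers latency.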

Understanding Throughput

Throughput, on the other hand, measures the rate at which data is successfully delivered from one point to another over a network within a specific timeframe, usually expressed in bits per second (for example, Mbit/s). Throughput is related to, but not the same as, bandwidth: bandwidth is the maximum capacity of a link, while throughput is the rate actually achieved, which is often lower because of protocol overhead, congestion, and packet loss.
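Measured throughput is simply the amount of data delivered divided by the time it took, and comparing it with the link's nominal bandwidth shows how much of the capacity is actually being used. A minimal sketch, using a hypothetical transfer of 125 MB in 20 seconds over a 100 Mbit/s link (the numbers and function names are illustrative assumptions):

```python
def throughput_mbps(bytes_transferred: int, seconds: float) -> float:
    """Achieved throughput in megabits per second (1 Mbit = 1,000,000 bits)."""
    if seconds <= 0:
        raise ValueError("duration must be positive")
    return bytes_transferred * 8 / seconds / 1_000_000


def utilization(throughput: float, link_capacity: float) -> float:
    """Fraction of the link's nominal bandwidth actually used (same units)."""
    return throughput / link_capacity


if __name__ == "__main__":
    # Hypothetical transfer: 125 MB moved in 20 s over a 100 Mbit/s link.
    tput = throughput_mbps(125_000_000, 20)
    print(f"throughput: {tput:.1f} Mbit/s")              # 50.0 Mbit/s
    print(f"utilization: {utilization(tput, 100):.0%}")  # 50%
```

Here the link offers 100 Mbit/s of bandwidth, but the transfer only achieved 50 Mbit/s of throughput.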

The Relationship Between Latency and Throughput

While both latency and throughput are crucial for network performance, they are not simply inversely proportional; instead, each can constrain the other. Pushing a link toward its maximum throughput can fill router queues, so packets wait longer and latency rises; this is what happens during network congestion. In the other direction, protocols such as TCP send only a limited amount of data before waiting for an acknowledgement, so a longer round trip caps how much data a single connection can deliver per second. As a result, optimizing aggressively for one metric often involves trade-offs with the other.
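Both effects can be put into numbers with two simple relationships. A sender that may have at most a fixed amount of unacknowledged data in flight (for example, a TCP window) cannot exceed window ÷ RTT, no matter how fast the link is. And a backlog of queued data adds queue size ÷ link rate of waiting time to every new packet. The sketch below assumes a hypothetical 64 KiB window and a hypothetical 1.25 MB queue on a 100 Mbit/s link; it ignores window scaling, loss, and other real TCP behaviour.

```python
def max_window_throughput_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on one flow's throughput when at most `window_bytes`
    can be in flight per round trip: window / RTT, in Mbit/s."""
    return window_bytes * 8 / (rtt_ms / 1000) / 1_000_000


def queueing_delay_ms(queued_bytes: int, link_mbps: float) -> float:
    """Extra wait, in ms, for a packet arriving behind `queued_bytes`
    on a link that drains at `link_mbps`."""
    return queued_bytes * 8 / (link_mbps * 1_000_000) * 1000


if __name__ == "__main__":
    window = 64 * 1024  # hypothetical 64 KiB of data in flight
    for rtt in (10, 100):
        print(f"RTT {rtt} ms: at most "
              f"{max_window_throughput_mbps(window, rtt):.1f} Mbit/s")
    # Hypothetical 1.25 MB backlog on a 100 Mbit/s link.
    print(f"queueing delay: {queueing_delay_ms(1_250_000, 100):.0f} ms")
```

With these numbers the throughput ceiling falls from about 52.4 Mbit/s at 10 ms RTT to about 5.2 Mbit/s at 100 ms RTT, and the full queue adds 100 ms of latency.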

Impact on Network Performance

Both latency and throughput significantly impact various network applications. Here’s a breakdown of their influence:

- Latency-sensitive applications: Applications like online gaming, video conferencing, and real-time stock trading require low latency for smooth performance. Even minor delays can disrupt the user experience.

- Throughput-sensitive applications: Applications that involve large data transfers, such as file downloads, video streaming, and backups, benefit from high throughput. Faster data transfer rates expedite these tasks.
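A simplified model makes the split between the two groups concrete: total time ≈ one round trip to request the data + payload size ÷ throughput. The sketch assumes a hypothetical connection with 50 ms RTT and 100 Mbit/s of throughput, and it ignores handshakes, TCP slow start, and packet loss, so real transfers take longer.

```python
def transfer_time_s(size_bytes: int, rtt_ms: float, throughput_mbps: float) -> float:
    """Simplified model: one round trip to request the data, then the
    payload streams at a steady throughput. Ignores handshakes, slow
    start and loss."""
    request_s = rtt_ms / 1000
    payload_s = size_bytes * 8 / (throughput_mbps * 1_000_000)
    return request_s + payload_s


if __name__ == "__main__":
    rtt_ms, link_mbps = 50, 100  # hypothetical connection
    for label, size in (("1 kB game update", 1_000),
                        ("1 GB download", 1_000_000_000)):
        total = transfer_time_s(size, rtt_ms, link_mbps)
        latency_share = (rtt_ms / 1000) / total
        print(f"{label}: {total:.3f} s, {latency_share:.1%} of it is latency")
```

For the small update almost all of the time is latency, so doubling throughput barely helps; for the large download almost all of it is payload time, so extra latency barely matters.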

Optimizing Network Performance

The ideal scenario involves achieving a balance between high throughput and low latency. This can be accomplished through various network optimization techniques, such as:

- Network upgrades: Investing in higher-bandwidth infrastructure, such as fiber optic cables, can significantly boost throughput.

- Traffic shaping: Prioritizing critical traffic and regulating non-essential traffic can help minimize congestion and maintain low latency.

- Content delivery networks (CDNs): Distributing content across geographically dispersed servers can reduce latency for users by bringing data physically closer to them.
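Traffic shaping is commonly built on a token bucket: tokens accumulate at a fixed rate up to a maximum, and a packet may be sent only if enough tokens are available. The sketch below is a generic textbook version, not how any particular router, BNG, or Jaze product implements shaping; the rate and burst values are hypothetical. It only makes the conformance decision: a shaper would hold non-conforming packets in a queue until tokens are available, while a policer would drop them.

```python
import time


class TokenBucket:
    """Minimal token bucket: traffic may pass at `rate` bytes/s on average,
    with bursts of up to `capacity` bytes."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full so an initial burst is allowed
        self.clock = clock      # injectable clock, handy for testing
        self.last = clock()

    def _refill(self) -> None:
        # Add tokens for the time elapsed since the last check, capped at capacity.
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def allow(self, packet_bytes: int) -> bool:
        """Spend tokens and return True if the packet conforms; otherwise
        return False so the caller can queue (shape) or drop (police) it."""
        self._refill()
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes
            return True
        return False


if __name__ == "__main__":
    now = [0.0]  # fake clock so the example is deterministic
    bucket = TokenBucket(rate=1_000, capacity=1_500, clock=lambda: now[0])
    print(bucket.allow(1_500))  # True: the bucket starts full
    print(bucket.allow(500))    # False: no tokens left yet
    now[0] = 0.5                # half a second later, 500 tokens have accrued
    print(bucket.allow(500))    # True
```

Prioritization is typically layered on top, for example by giving each traffic class its own bucket and serving higher-priority queues first, so that bulk transfers cannot build up the queues that delay latency-sensitive traffic.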

Jaze Network’s ISP Management Software offers a powerful solution for understanding your subscribers’ usage patterns, so that you can make informed decisions about optimising your network for higher throughput and lower latency. With Jaze ISP Manager’s AAA, you can prioritize critical traffic in combination with BNGs and ensure a seamless user experience.